Or in Amnesty International's case - why you should NOT use AI Images. Even if you do credit them to your robot.

Spare a thought for the Amnesty International Communications Team, who used AI-generated images to illustrate some social media posts. They were roundly criticised for doing so.

The issue was not that the images weren't good enough. They were. (Just).

The issue was not that the images weren't credited as AI images. They were.

The issue was not that they were shocking. They were.

In fact, they probably weren't shocking enough.

Amnesty used the AI images to promote their report on the brutal police response to Colombia's 2021 national strike, which left at least 38 civilians dead.

Where Amnesty International went wrong was thinking that they could mix facts (i.e. their reports) with fiction (AI-generated images).

The issue was that the AI-generated images were neither wholly factual nor sufficiently illustrative. They looked real enough to pass for photographs, but they were neither a record of events nor an obvious illustration.

Amnesty's reputation relies on how they present facts.

That reputation is undermined by using faked images. Even though the images were credited as AI-generated.

The credit alone is not enough.

So how should campaigning organisations use AI tools like ChatGPT and Midjourney to tell their stories?

We were recently asked to run a workshop for 30+ Communications Directors on how to use AI effectively in communications.

Many of those organisations are active in human rights, citizen advocacy and best practice across the EU.

Here are some of the key themes we covered:

Transparency is everything

Everything needs to be 100% accurate, or clearly fictional/representative. AI Image generators are good, but not that good.

For now, use them for graphics and heavily stylised depictions. But never use photo-realistic images in public, even with a disclaimer, unless you are 100% confident they won't attract complaints.

Which, let's face it, is never.

Always check your AI's sources

ChatGPT famously makes up sources and data. It can't be trusted. So don't trust it. When you prompt it, be specific about the only sources that it can use.

But even so, if you ask ChatGPT to draft some bullet points for a speech or an Op-Ed, always go back to the sources it quotes yourself to be sure.

One of the Directors at the event explained how a journalist insisted they find a report that their organisation had published previously. The press team spent ages working with experts to search for the report. Which never actually existed. The journalist didn't believe them.

It was only when the press team asked the journalist whether the report had been cited by ChatGPT that the journalist believed them. Even though the report had an extremely plausible-sounding name and URL slug.

ChatGPT had literally made the whole thing up.
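
Part of that checking can be done mechanically before a human reads the draft. The following is a minimal sketch, assuming you keep a list of the only documents you told ChatGPT to use. The names `ALLOWED_SOURCES` and `find_unverified_urls`, and the example URLs, are hypothetical and only for illustration. It pulls every link out of a draft and flags any that are not on your list.

```python
import re

# Hypothetical allow-list: the only documents the model was told to use.
ALLOWED_SOURCES = {
    "https://example.org/reports/annual-report.pdf",
}

# Rough pattern for http(s) links in plain text (an assumption, not a full URL parser).
URL_PATTERN = re.compile(r"https?://[^\s)\]>\"']+")


def find_unverified_urls(draft: str, allowed: set[str]) -> list[str]:
    """Return URLs cited in the draft that are not on the allow-list."""
    # Strip trailing punctuation that belongs to the sentence, not the link.
    cited = [url.rstrip(".,;:") for url in URL_PATTERN.findall(draft)]
    unverified: list[str] = []
    for url in cited:
        if url not in allowed and url not in unverified:
            unverified.append(url)
    return unverified


draft = (
    "Our findings (https://example.org/reports/annual-report.pdf) echo an "
    "earlier study at https://example.org/reports/made-up-study."
)
for url in find_unverified_urls(draft, ALLOWED_SOURCES):
    print("Check by hand:", url)
```

A link that passes this check is not proof that the quoted claim appears in the document. Someone still has to open the source and read it.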

Don't upload anything sensitive to ChatGPT. Instead, only use public documents, including links to PDFs

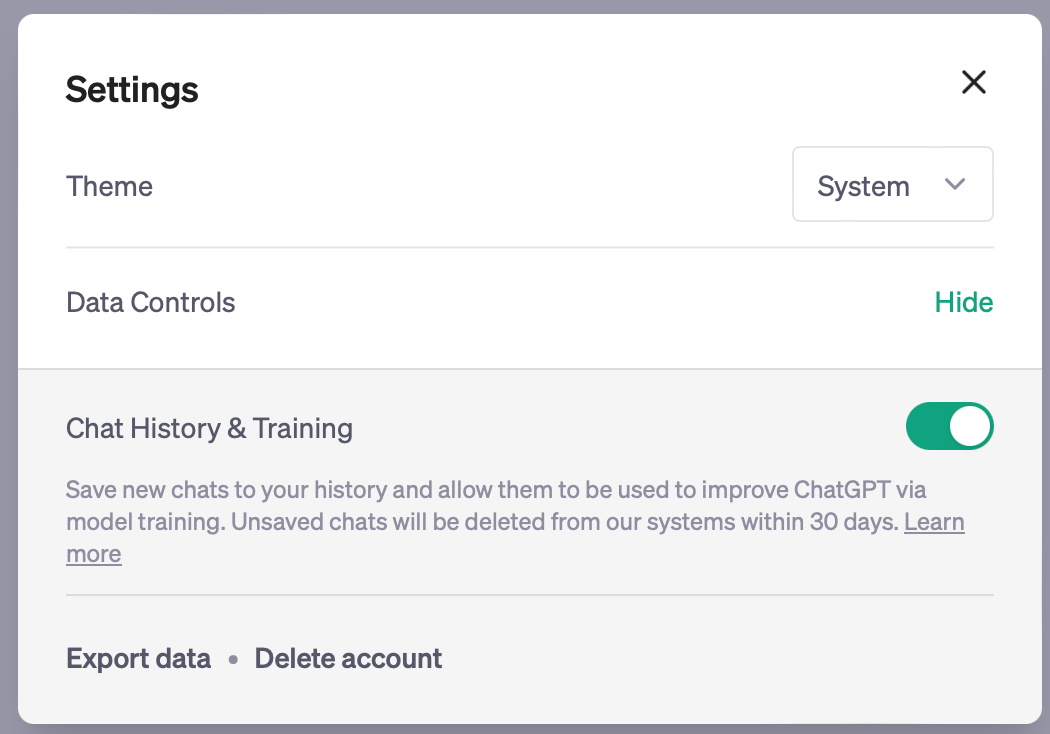

Unless you find and untick the "chat history and training" button, everything you upload gets ingested into the bowels of the beast. And then it can be presented to anyone who wants it.

While it might be tempting to ask ChatGPT to draft a speech, an op-ed, a blog post and a series of social posts based on a forthcoming report you can cut and paste into it, don't. Everything you type into it is considered fair game. And public.

In April, someone from Samsung uploaded sensitive data (as code to test). On 1 May the company banned ChatGPT entirely.

The more specific you are with your prompt, the better

Obvious, huh?

In fact, the session we were running started out being called: How to brief your agency. But it soon morphed into: How to train your robot. Because the two are extremely similar. A good ChatGPT prompt should always contain references to:

- Audience - who should ChatGPT behave like? And who is the output for?

- Call to action - what do you want the final audience to do, say, think or feel differently as a result?

- Channel - what format do you want the output in? Is it a speech, an Op-Ed, a blog post, a series of social posts, or a mix of all of the above?

- Source material - where specifically should ChatGPT look for answers? Ideally, share links to documents

- Tone and style - how formal/informal, serious, threatening, welcoming etc. etc. do you want the final output to be?
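
The five elements above can be treated as a checklist that refuses to produce a prompt until every item is filled in. This is a minimal sketch, assuming you assemble prompts as plain text before pasting them into ChatGPT. The `PromptBrief` class, its field names and the example values are hypothetical, not part of any tool.

```python
from dataclasses import dataclass, field


@dataclass
class PromptBrief:
    """Hypothetical container for the five elements of a brief."""
    audience: str
    call_to_action: str
    channel: str
    tone: str
    sources: list[str] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """List the elements of the brief that are still empty."""
        text_fields = ("audience", "call_to_action", "channel", "tone")
        missing = [name for name in text_fields if not getattr(self, name).strip()]
        if not self.sources:
            missing.append("sources")
        return missing

    def to_prompt(self) -> str:
        """Build the prompt text, or fail loudly if the brief is incomplete."""
        missing = self.missing_fields()
        if missing:
            raise ValueError("Brief is incomplete: " + ", ".join(missing))
        source_lines = "\n".join(f"- {source}" for source in self.sources)
        return (
            f"Audience: {self.audience}\n"
            f"Call to action: {self.call_to_action}\n"
            f"Channel: {self.channel}\n"
            f"Tone and style: {self.tone}\n"
            f"Use only these sources:\n{source_lines}"
        )


brief = PromptBrief(
    audience="Write as a policy director, for EU policymakers",
    call_to_action="Readers should support the proposed amendment",
    channel="An op-ed of about 700 words",
    tone="Formal but urgent",
    sources=["https://example.org/reports/annual-report.pdf"],
)
print(brief.to_prompt())
```

Failing on an empty field is a deliberate choice here: it forces the same discipline as a good agency brief, before any time is spent editing a vague draft.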

There are loads of 'prompt helpers' online. One we particularly like is ChainBrain AI. It uses ChatGPT to actually help you to write more detailed prompts. And then acts on them.

But whether you use a prompt helper or not, be as specific as you can possibly be with your prompts. Time spent writing the prompts is time saved editing.

How should you credit images created by AI tools like Midjourney or Dall-E?

There is no legal precedent (yet), but the smart money suggests that if you write the prompt, you own the copyright. That doesn't mean you need to credit the software used, or the 'prompt engineer'.

But, we would recommend that every image you use that is created by an AI tool is labelled as such.

And don't use an AI tool to try and depict a situation that a photojournalist could capture better. Because they (literally) are there. On the ground. Recording and reporting facts.

As Amnesty International learned the hard way.

We now include how to use AI tools within our communications training programmes, particularly for our writing training. Do get in touch if you would like to find out more.

We can even introduce you to our robot avatars.